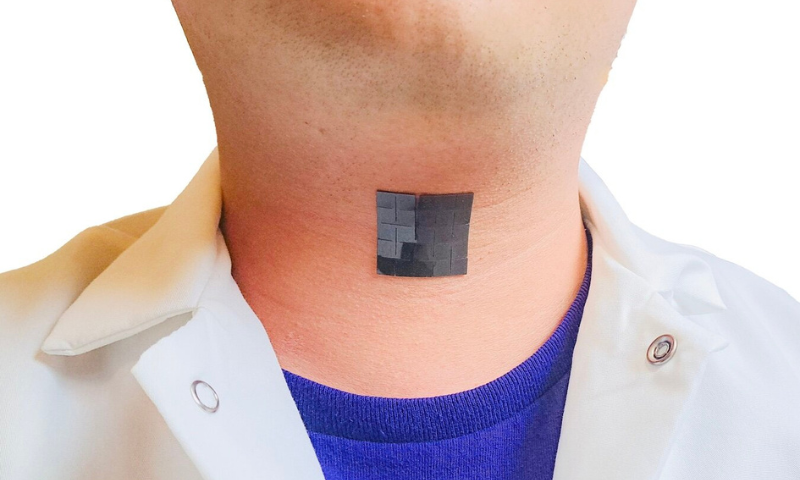

CALIFORNIA: Researchers at the University of California, Los Angeles (UCLA) have designed a self-powered throat patch that uses machine learning to translate throat-muscle movements into speech, a technology that could help people who cannot use their vocal cords to communicate. The work is led by Jun Chen, an assistant professor of bioengineering at UCLA.

Inspired by the strain that long lectures put on his own vocal cords, Chen set out to build a non-invasive device that would let people speak without relying on their vocal cords. With his colleagues, he created a lightweight patch that adheres to the throat and uses artificial intelligence to decode muscle movements into speech.

The patch is sweat-resistant and generates its own electricity from the wearer's muscle movements, so it needs no battery. The device is described in a study published in the journal Nature Communications.

Weighing just 7.2 grams, the patch consists of five thin layers that respond to subtle movements of the throat muscles. When the wearer attempts to say a phrase, these layers generate electrical signals that a machine-learning model can interpret as speech. The outer layers are made of soft, flexible silicone. The central layer, made of silicone embedded with micromagnets, produces a magnetic field that changes as the muscles move. The remaining middle layers contain coils of copper wire that convert these changes in the magnetic field into electrical impulses.
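The last step, turning a changing magnetic field into an electrical impulse, follows Faraday's law of induction. As a general illustration only (the study's coil dimensions and measured voltages are not given here), the voltage induced in a coil of $N$ turns is proportional to how fast the magnetic flux $\Phi_B$ through it changes:

```latex
\varepsilon = -N \frac{d\Phi_B}{dt}, \qquad \Phi_B = \int_S \mathbf{B} \cdot d\mathbf{A}
```

In this picture, a quicker muscle movement or a coil with more turns yields a larger voltage, while a magnetic field that stops changing yields none, which is why the patch produces signals only while the throat muscles are moving.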

In the experiment described in the study, Chen and his team trained a machine-learning algorithm on the electrical signals recorded by the patch. Eight participants each repeated five short phrases 100 times, allowing the algorithm to learn which muscle-movement patterns correspond to which phrase. The algorithm translated the signals into the intended phrases with 95% accuracy, whether the phrases were spoken aloud or mouthed silently.
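The article does not describe the team's model, so the following is only a minimal sketch of the general idea of supervised phrase classification, not the study's method. It assumes each recording has already been reduced to a fixed-length feature vector (a hypothetical preprocessing step) and uses a simple nearest-centroid classifier; the function names, labels, and toy vectors are illustrative.

```python
import math
from collections import defaultdict


def train_centroids(samples):
    """Learn one average feature vector (centroid) per phrase.

    samples: list of (feature_vector, phrase_label) pairs, where each
    feature vector is a hypothetical summary of one patch recording.
    """
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, x in enumerate(features):
            sums[label][i] += x
        counts[label] += 1
    return {label: [s / counts[label] for s in vec] for label, vec in sums.items()}


def predict(centroids, features):
    """Return the phrase whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


def accuracy(centroids, samples):
    """Fraction of (features, label) pairs that are classified correctly."""
    correct = sum(predict(centroids, f) == label for f, label in samples)
    return correct / len(samples)


# Toy usage with made-up two-dimensional features (not study data).
train = [
    ([0.9, 0.1], "phrase_1"), ([1.1, -0.1], "phrase_1"),
    ([0.0, 1.0], "phrase_2"), ([0.2, 0.8], "phrase_2"),
]
centroids = train_centroids(train)
print(predict(centroids, [0.95, 0.05]))  # phrase_1
```

Repeating each phrase many times, as the participants did, gives the classifier many examples per phrase to average over. A figure such as the reported 95% would normally be an accuracy measured on recordings held out from training, which is what the `accuracy` helper computes when given such a held-out set.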

While the findings are promising, Chen acknowledges several limitations: the initial testing involved only eight participants, none of whom had speech disorders, and was confined to just five phrases.